Today, we're sharing how we migrated 1.5 billion documents from MongoDB to DynamoDB to modernize our email tracking system.

Our email tracking system, first launched in 2015, was in dire need of an upgrade. It was originally built on a monolithic MongoDB database, where we recorded every email open along with details such as the timestamp, IP address, and HTTP user agent.

As our user base grew, so did our MongoDB collection, which eventually reached a staggering 1.5 billion records even after we culled old ones. Queries became intolerably slow, taking more than 200 ms; the worst offenders were the aggregations that show email opens in Gmail’s 'sent' view. Our indexes no longer fit in RAM, and the cost of running such a large MongoDB instance was becoming unsustainable.

Why DynamoDB?

Our search for a better solution led us to AWS DynamoDB. The key reasons for our choice were its integration with the rest of the AWS ecosystem, virtually unlimited scalability, consistent performance regardless of data size or query volume, and pay-as-you-go pricing. Because our data is essentially key/value, DynamoDB was a natural fit. We were also interested in exploring features such as DynamoDB Streams and global tables.

DynamoDB Data Model

Adopting DynamoDB meant embracing a new data model. We had to denormalize our data because DynamoDB doesn't support aggregations, a trade-off that helps it keep response times consistent as data grows. Our new model uses a composite primary key: the partition key "PK" is the unique message ID, and the sort key "SK" describes the type of data stored in the item. Keeping all of a message's data under one partition key makes access efficient, and because writing an item with an existing PK and SK replaces it, the model also prevents duplicate entries.

```
// Messages Mongo collection
{
  _id: '3MpYjFAH0wAIVR22v',
  to: [{ email: 'test@mixmax.com' }, { email: 'other@mixmax.com' }],
}

// Opens Mongo collection
{
  messageId: '3MpYjFAH0wAIVR22v',
  email: 'test@mixmax.com',
  timestamp: '2023-06-14T16:55:29.214Z',
  userAgent: 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 ',
  referrer: '',
},
{
  messageId: '3MpYjFAH0wAIVR22v',
  email: 'other@mixmax.com',
  timestamp: '2024-06-15T17:06:49.549Z',
  userAgent: 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 ',
  referrer: '',
}
```

[Our old MongoDB data model]

[Our new DynamoDB data model]
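Since the new schema survives only as a figure, here is a minimal sketch under stated assumptions of how the two Mongo collections above could fold into a single table where PK is the message ID and SK encodes the item type. The `RECIPIENT#` and `OPEN#` prefixes, the helper names, and the in-memory `FakeTable` are hypothetical, not Mixmax's actual schema. The sketch illustrates two points from the text: all of a message's data shares one partition key, so the 'sent' view needs one Query plus a small count instead of a cross-collection aggregation, and re-writing an item with the same PK and SK replaces it, as DynamoDB's PutItem does, rather than creating a duplicate.

```javascript
// Sketch: Mongo documents -> single-table DynamoDB items.
// ASSUMPTION: the "RECIPIENT#" / "OPEN#" sort-key prefixes and all helper
// names are hypothetical; the post only says PK = message ID, SK = data type.

// One item per recipient of a message.
function messageToItems(message) {
  return message.to.map(({ email }) => ({
    PK: message._id,
    SK: `RECIPIENT#${email}`,
    email,
  }));
}

// One item per open, stored under the same partition key as its message.
function openToItem(open) {
  return {
    PK: open.messageId,
    SK: `OPEN#${open.timestamp}#${open.email}`,
    email: open.email,
    timestamp: open.timestamp,
    userAgent: open.userAgent,
    referrer: open.referrer,
  };
}

// Hypothetical in-memory stand-in for a table. Like PutItem, writing an item
// whose PK + SK already exists replaces it instead of adding a duplicate.
class FakeTable {
  constructor() {
    this.items = new Map();
  }

  put(item) {
    this.items.set(`${item.PK}\u0000${item.SK}`, item);
  }

  // Like a Query on the partition key: every item with this PK, sorted by SK.
  query(pk) {
    return [...this.items.values()]
      .filter((item) => item.PK === pk)
      .sort((a, b) => (a.SK < b.SK ? -1 : a.SK > b.SK ? 1 : 0));
  }
}

// Opens per recipient for the 'sent' view, counted over a single partition.
function opensByRecipient(items) {
  const counts = {};
  for (const item of items) {
    if (item.SK.startsWith('RECIPIENT#')) {
      if (!(item.email in counts)) counts[item.email] = 0;
    } else if (item.SK.startsWith('OPEN#')) {
      counts[item.email] = (counts[item.email] || 0) + 1;
    }
  }
  return counts;
}

// Query input in the shape QueryCommand (@aws-sdk/lib-dynamodb) accepts.
function messageQueryParams(tableName, messageId) {
  return {
    TableName: tableName,
    KeyConditionExpression: 'PK = :pk',
    ExpressionAttributeValues: { ':pk': messageId },
  };
}

// Usage with the example documents from above.
const table = new FakeTable();
const message = {
  _id: '3MpYjFAH0wAIVR22v',
  to: [{ email: 'test@mixmax.com' }, { email: 'other@mixmax.com' }],
};
const open = {
  messageId: '3MpYjFAH0wAIVR22v',
  email: 'test@mixmax.com',
  timestamp: '2023-06-14T16:55:29.214Z',
  userAgent: 'Mozilla/5.0',
  referrer: '',
};
messageToItems(message).forEach((item) => table.put(item));
table.put(openToItem(open));
table.put(openToItem(open)); // same PK + SK: overwritten, not duplicated
console.log(opensByRecipient(table.query(message._id)));
// { 'test@mixmax.com': 1, 'other@mixmax.com': 0 }
```

Against a real table, the object returned by `messageQueryParams` would be passed to `QueryCommand` from the AWS SDK v3 document client. The in-memory table only mimics the overwrite and sort-order behavior.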

Rolling Out the New System

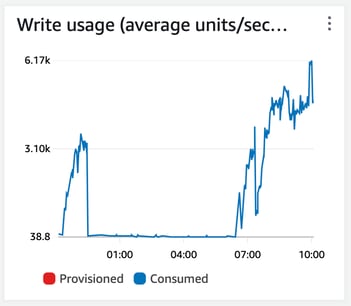

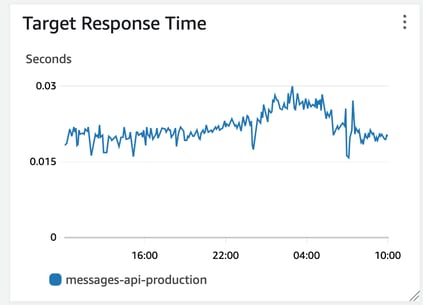

Implementing the new system was a massive undertaking. We began by writing new data to both MongoDB and DynamoDB. A week-long migration then transferred all 1.5 billion documents to DynamoDB, with the database easily handling 10,000 insertions per second. To our surprise, reads from DynamoDB didn't get slower during the migration; they actually got faster.

See this explanation. After confirming the data was in sync, we updated our UI to read from DynamoDB and eventually decommissioned the MongoDB setup.
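The two phases described above, dual writes for new data followed by a backfill of existing documents, can be sketched as follows. This is a minimal, hypothetical sketch, not Mixmax's migration code. The injected `writeMongo`, `writeDynamo`, `toItem`, and `sendBatch` functions and the `EmailOpens` table name are placeholders. The one hard constraint it encodes is DynamoDB's limit of 25 put or delete requests per BatchWriteItem call.

```javascript
// Sketch of the two migration phases: dual writes, then a batched backfill.
// ASSUMPTION: writeMongo, writeDynamo, toItem and sendBatch are hypothetical
// injected functions; in production, sendBatch would wrap BatchWriteCommand
// from @aws-sdk/lib-dynamodb and retry any UnprocessedItems it returns.

// BatchWriteItem accepts at most 25 put or delete requests per call.
const MAX_BATCH_WRITE_ITEMS = 25;

// Phase 1: every new open is written to both stores. MongoDB is written first
// here because it stays the source of truth until reads are switched over
// (an assumption; the post does not describe write ordering).
async function recordOpen(open, { writeMongo, writeDynamo }) {
  await writeMongo(open);
  await writeDynamo(open);
}

// Phase 2: walk the historical documents and write them in 25-item batches.
// Accepts arrays or async iterables (for example, a MongoDB driver cursor).
async function backfill(docs, { tableName, toItem, sendBatch }) {
  let chunk = [];
  let written = 0;

  const flush = async () => {
    if (chunk.length === 0) return;
    await sendBatch({
      RequestItems: {
        [tableName]: chunk.map((Item) => ({ PutRequest: { Item } })),
      },
    });
    written += chunk.length;
    chunk = [];
  };

  for await (const doc of docs) {
    chunk.push(toItem(doc));
    if (chunk.length === MAX_BATCH_WRITE_ITEMS) await flush();
  }
  await flush(); // write the final partial batch
  return written;
}

// Usage with fake writers: 60 documents become batches of 25, 25 and 10.
(async () => {
  const docs = Array.from({ length: 60 }, (_, i) => ({
    messageId: `msg${i}`,
    email: 'test@mixmax.com',
    timestamp: new Date(Date.UTC(2023, 5, 14, 0, 0, i)).toISOString(),
  }));
  const batchSizes = [];
  const written = await backfill(docs, {
    tableName: 'EmailOpens', // hypothetical table name
    toItem: (d) => ({ PK: d.messageId, SK: `OPEN#${d.timestamp}#${d.email}` }),
    sendBatch: async (req) => batchSizes.push(req.RequestItems.EmailOpens.length),
  });
  console.log(written, batchSizes); // 60 [ 25, 25, 10 ]
})();
```

For scale: at a sustained 10,000 writes per second, 1.5 billion items amount to 150,000 seconds (about 42 hours) of write time, or 400 full 25-item batches per second.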

Conclusion: A Scalable Future with DynamoDB

The transition to DynamoDB has been a resounding success. We now have a scalable, hands-off solution that delivers consistent response times for our users, even as data volumes grow. Encouraged by this success, we're planning to move more of our core data stores to DynamoDB.

Interested in working on DynamoDB at Mixmax? Visit Mixmax Careers.