This is the next post in a series on how we scaled Mixmax from a successful prototype to a platform that scales to many thousands of users. In this post, I’ll describe some of the front-end performance bottlenecks we experienced while using the Meteor framework and why we had to move one part of our app off it.

We value performance deeply at Mixmax. It’s also very important to our users. Since Mixmax is deeply integrated within Gmail, our users expect it to behave exactly like the built-in Gmail UI. Our users should never see a loading spinner using Mixmax inside of Gmail, just as you’d never see a loading spinner inside of Gmail itself.

First, a quick primer on Mixmax architecture: Mixmax is a Chrome Extension that replaces your Gmail new email compose window with its own editor, loaded using an iframe. The reason we use an iframe is to keep our code and CSS entirely separate from Gmail and to minimize the failure-prone integration points with Gmail. However, as an iframe, we incur a network round trip cost every time you click the Compose button. That’s why load time and initial rendering performance are absolutely critical to our user experience.

Load time was one of our biggest performance complaints. Our time to first render was over 8 seconds (90th percentile). Having just finished migrating our backend to a new microservices architecture, we decided it was time to rethink the front-end.

Performance Analysis

The core metric that we wanted to optimize for was “time to first render”. Specifically, this is the time from when the server first receives the request to when the user sees the Compose window UI. This time can be broken down into several loading segments: the time spent processing the request server-side, the time loading external Javascript client-side, and the time from the DOMContentLoaded event (once all initial Javascript is run) until the view is rendered.

Step 1. Time to first byte

Our 90th percentile time to first byte (TTFB) is 800ms, which is quite fast given that it includes the network round trip time of the request and the start of the response. The actual time spent processing server-side is always less than 50ms; the rest is pure network, likely because we're only hosted on the US east coast but have a worldwide audience. We measured this with a small snippet that sent our TTFB time to Keen.io for processing.
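The original measurement snippet is not reproduced here. As a minimal sketch of how such a measurement might look, the code below assumes the browser's Navigation Timing fields `requestStart` and `responseStart`, and uses a hypothetical `sendEvent` callback as a stand-in for whatever analytics client call is actually used.

```javascript
// Time to first byte from a Navigation Timing-style object (milliseconds).
function computeTtfbMs(timing) {
  return timing.responseStart - timing.requestStart;
}

// `sendEvent` is a hypothetical stand-in for an analytics client call;
// it receives a collection name and the event payload.
function reportTtfb(timing, sendEvent) {
  const event = { ttfbMs: computeTtfbMs(timing) };
  sendEvent('ttfb', event);
  return event;
}

// Made-up timestamps for illustration, not measurements from this post.
const sentEvents = [];
const sampleTiming = { requestStart: 100, responseStart: 350 };
reportTtfb(sampleTiming, (name, data) => sentEvents.push({ name, data }));
console.log(sentEvents);

// In a browser this could be wired up roughly as:
//   reportTtfb(window.performance.timing, (name, data) => { /* send it */ });
```

Passing the sender in as a function keeps the timing logic independent of any particular analytics service.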


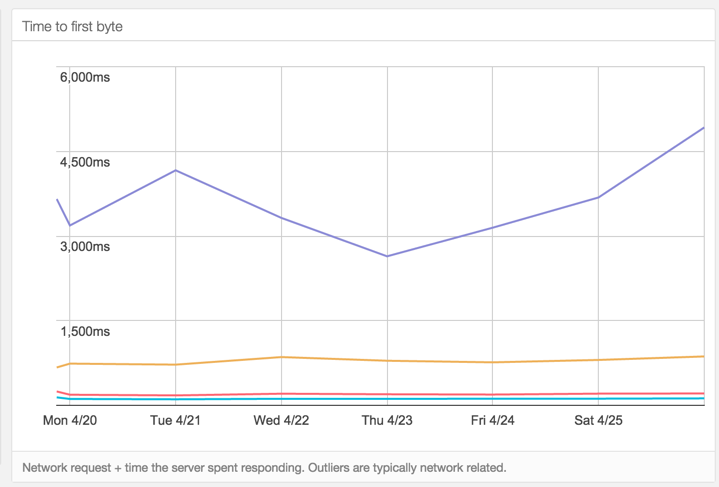

Here is the data from our internal analytics dashboard (built using Keen’s API) of the 98th, 90th, 50th, and 20th percentile TTFB load times:

This is understandably fast since all the server is doing is creating a new Mixmax message object in the database, inlining it in the page (using the Meteor fastrender package), and then returning the boilerplate Meteor HTML page.
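For readers less familiar with percentile metrics, here is a minimal sketch of how such figures can be derived from raw samples. It uses the nearest-rank definition, which is an assumption: the dashboard numbers came from Keen, whose exact method may differ. The sample values are made up.

```javascript
// Nearest-rank percentile: the smallest sample such that at least
// p percent of all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) {
    throw new Error('percentile() needs at least one sample');
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Made-up TTFB samples in milliseconds, not data from this post.
const ttfbSamples = [120, 150, 200, 240, 310, 400, 520, 640, 800, 1500];
const summary = {};
for (const p of [98, 90, 50, 20]) {
  summary[`p${p}`] = percentile(ttfbSamples, p);
}
console.log(summary);
```

High percentiles are useful here because they describe the experience of the slowest users rather than the typical one.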

Step 2. Loading external Javascript

The 90th percentile time between TTFB and DOMContentLoaded was 5 seconds. This is atrociously slow compared to most other web apps. We had our work cut out here; we needed to examine this segment closely.

This segment can be further broken down into two areas:

a) Network time loading Javascript

We currently load our app’s Javascript from a CDN. It is permacached based on its Git SHA (which serves as its version number). However, since we push a new version of the app every day, a new app Javascript file gets built and users need to download it again every morning. It’s also quite a large file, currently 391 KB after automatic gzipping, because Meteor includes all templates and libraries in one bundle even if they’re not used. This is actually OK for now since the bundle is often preloaded and then permacached until our next deploy. However, it might be an issue if a user wakes up in the morning (after our deploy) on a slow connection, launches Gmail, and then has to wait for the 391 KB of Javascript to load. They’ll be waiting for a while.
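To put a rough number on "a while", here is a back-of-the-envelope sketch. It assumes the bundle size is in kilobytes of 1024 bytes, and the link speeds are arbitrary examples rather than measurements of our users' connections.

```javascript
// Estimated seconds to download a file over a link of a given speed.
// Ignores latency, connection setup and TCP slow start, so real
// download times will be somewhat worse than this estimate.
function estimateDownloadSeconds(sizeKilobytes, linkMegabitsPerSecond) {
  const bits = sizeKilobytes * 1024 * 8;
  const bitsPerSecond = linkMegabitsPerSecond * 1000 * 1000;
  return bits / bitsPerSecond;
}

const BUNDLE_KB = 391; // gzipped bundle size quoted above
const estimates = {};
for (const mbps of [0.5, 1, 5]) {
  estimates[mbps] = estimateDownloadSeconds(BUNDLE_KB, mbps);
  console.log(`${mbps} Mbit/s: about ${estimates[mbps].toFixed(1)} s`);
}
```

Even before any Javascript runs, a slow link can add seconds of pure transfer time.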

b) Executing the Javascript

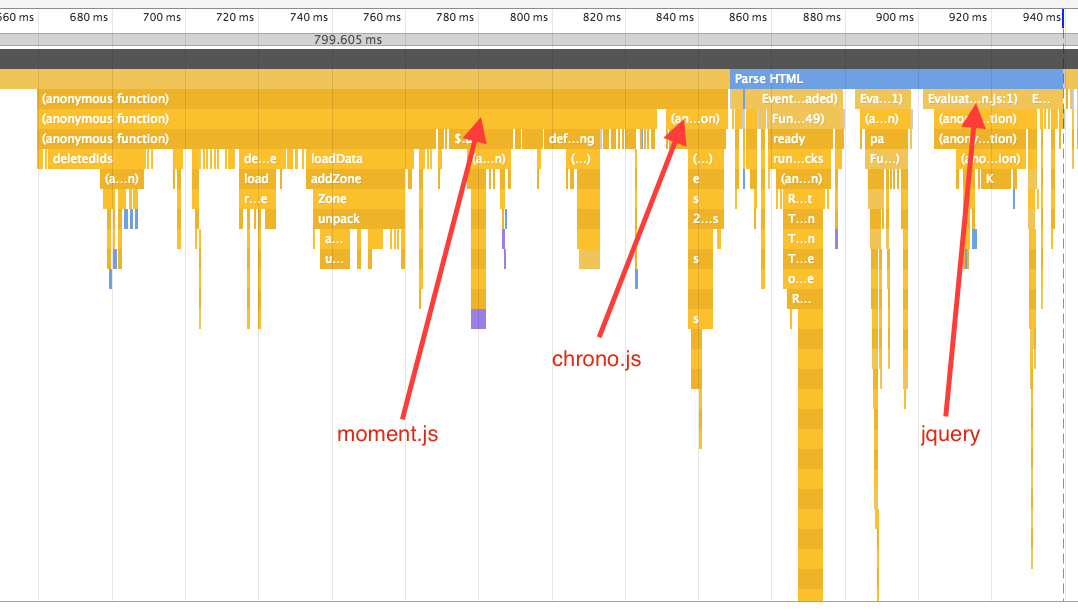

After the app Javascript is downloaded, the browser executes it. This is where way too much time was being spent. Here is the Chrome Web Inspector flame graph of our app bootup:

We were spending almost 400ms in pure Javascript, tested on a brand new fast MacBook. So it’s probably several seconds on the average computer. The top bottlenecks are:

- Initializing moment-timezone.js - 171ms

- Initializing jquery - 30ms

- Initializing chrono.js - 20ms

- Initializing Iron Router - 20ms

This is pure CPU time executing the Javascript and bootstrapping these libraries. We needed to put in a lot of work here!
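The numbers above came from the flame graph. As a complementary sketch (not what we used for this analysis), initialization cost can also be measured directly in code by wrapping a bootstrap function in a timer. `fakeLibraryInit` below is a hypothetical stand-in for an expensive library bootstrap.

```javascript
// Run `init` once and report how long it took in milliseconds.
function timeInit(name, init) {
  // Prefer the high-resolution clock when the environment provides one.
  const now = () =>
    typeof performance !== 'undefined' ? performance.now() : Date.now();
  const start = now();
  const result = init();
  return { name, durationMs: now() - start, result };
}

// Hypothetical stand-in for an expensive library bootstrap.
function fakeLibraryInit() {
  let total = 0;
  for (let i = 0; i < 1e6; i++) total += i;
  return total;
}

const initTiming = timeInit('fake-library', fakeLibraryInit);
console.log(`${initTiming.name}: ${initTiming.durationMs.toFixed(1)}ms`);
```

Logging a measurement like this per library makes regressions visible without opening a profiler.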

Step 3. From DOMContentLoaded to when the compose view gets rendered

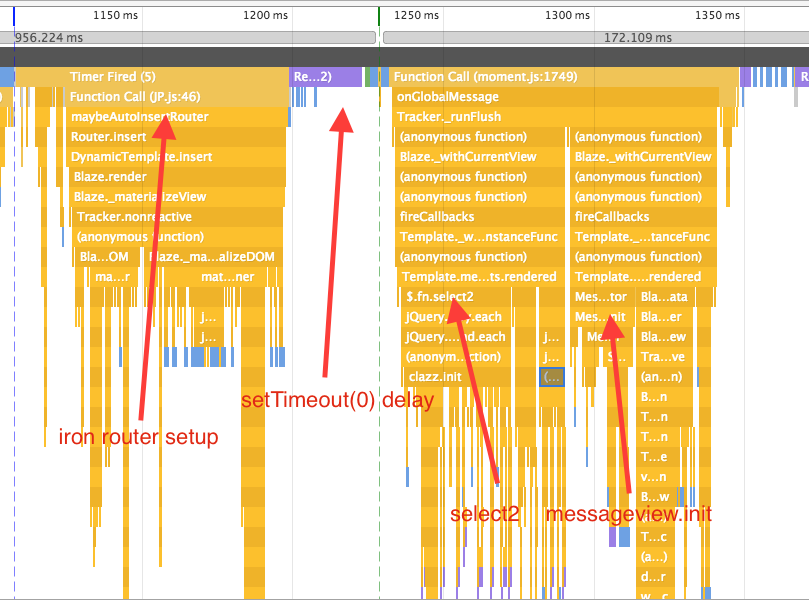

After the initial libraries are loaded and the initial Javascript is executed, the next thing that runs is the DOMContentLoaded event. This part adds about 200ms to render the view. Here’s a breakdown:

As you can see here, the bottlenecks are:

- Setting up Iron Router (and Meteor Blaze templates) - 75ms. This is where Meteor is setting up data structures for its Blaze templates.

- setTimeout(0) delay - 50ms. Meteor’s Blaze engine has its own internal event loop that uses a setTimeout to wait for the next cycle of the Javascript event loop to start rendering templates. This setTimeout on start gives the browser the opportunity to relayout the DOM needlessly, costing about 50ms of load time.

- select2 initialization - 40ms. This is used for our autocomplete ‘to’ field.

- messageview initialization - 25ms. Needed for our own UI (the message view) to render.

How did we fix this?

We came up with three primary goals that would help us address these performance problems:

1. Only load libraries when they’re needed

We needed to reduce our overall Javascript size (Step 2a) as well as eliminate the expensive execution time (Step 2b). For example, moment-timezone.js and chrono.js collectively take almost 200ms just to run on page load, yet they’re not used in the initial rendering of the page. We should lazily load these only when they’re needed.
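One way to express "load only when needed" is a small memoizing wrapper, sketched below. The `lazy` helper and the loader passed to it are hypothetical names for illustration; a real loader might inject a script tag and resolve once it has executed.

```javascript
// Wrap an expensive loader so it runs at most once, on first use.
// Every call returns the same promise for the loaded value.
function lazy(load) {
  let cached = null;
  return function get() {
    if (cached === null) {
      cached = new Promise((resolve) => resolve(load()));
    }
    return cached;
  };
}

let loadCount = 0;
// Hypothetical loader standing in for an expensive library.
const getTimezoneLib = lazy(() => {
  loadCount += 1;
  return { name: 'timezone-lib' };
});

// Nothing is loaded until the first call; repeat calls reuse the result.
const firstCall = getTimezoneLib();
const secondCall = getTimezoneLib();
console.log(loadCount, firstCall === secondCall);
```

Callers that need the library call `getTimezoneLib().then(...)`, so page load never pays for it unless a feature actually uses it.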

2. Move off Meteor’s Blaze & Iron Router

Meteor Blaze takes about 75ms just to set up its data structures for templates. Additionally, it uses a setTimeout call before rendering its first view. While it’s a setTimeout for '0' ms, the callback still has to wait for the Javascript task queue to be exhausted, which, depending on what else is being loaded, might take anywhere from 10ms to 500ms. This can all be avoided by rendering templates directly into the DOM on page load and not using Meteor.
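The setTimeout behavior is easy to see in isolation. This short sketch is generic Javascript, not Blaze code: the callback is queued as a separate task, so any synchronous work already in progress finishes first.

```javascript
const order = [];

// Even with a 0ms delay, the callback is queued as a separate task.
setTimeout(() => order.push('timeout callback'), 0);

// Synchronous work always runs to completion before that task starts.
let busy = 0;
for (let i = 0; i < 1e6; i++) busy += i;
order.push('synchronous work');

// Snapshot taken before control returns to the event loop:
// the timeout callback has not had a chance to run yet.
const orderBeforeYield = [...order];
console.log(orderBeforeYield);
```

The more work is queued during page load, the longer a "zero" timeout ends up waiting.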

3. Use server-side rendering

Most of the compose window is static anyway, so there’s no reason we need to render it client-side. We should be able to render the basic view (to, cc, bcc, and message fields) on page load and not even have to wait for the Javascript to be loaded.

New architecture

Given the above findings of too much Javascript slowing us down (in both network and execution time), we decided to move our compose window off of Meteor entirely. While Meteor one day might support server-side rendering and other page load optimizations, it’s currently not the right framework to use when loading performance is critical to your app's experience. We are, however, keeping the rest of our app (such as the Mixmax Dashboard) on Meteor.

We chose to architect this new service as close to the metal and bare bones as possible. So we chose a basic Express app with a Backbone front-end. Our new architecture has the following characteristics:

Server-side rendering

We render the entire HTML for the compose window server-side in Express. We even fill it out with as much data as possible (such as the user’s email address) so the user sees a complete UI even before Javascript loads. Then, when the Javascript is finally downloaded and executed, it simply attaches to the server-side-rendered DOM. Backbone makes this easy by offering several usage patterns to attach a view to an element.
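A minimal sketch of the idea follows. The markup, field names, and the `renderComposeHtml` helper are hypothetical and far simpler than the real compose window. The point is only that the HTML is a plain string built on the server with the user's data already filled in.

```javascript
// Escape text so user data can be inlined into HTML safely.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Hypothetical markup; the real compose window is far richer.
function renderComposeHtml(user) {
  return [
    '<form id="compose">',
    `  <input name="from" value="${escapeHtml(user.email)}" readonly>`,
    '  <input name="to" placeholder="To">',
    '  <input name="cc" placeholder="Cc">',
    '  <input name="bcc" placeholder="Bcc">',
    '  <textarea name="message"></textarea>',
    '</form>',
  ].join('\n');
}

const composeHtml = renderComposeHtml({ email: 'user@example.com' });
console.log(composeHtml);
```

In an Express route handler, a string like this could be returned with `res.send(...)`. On the client, a Backbone view can attach to the existing markup by passing the already-rendered element as the `el` option when the view is constructed, instead of rendering it again.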

Load libraries separate from application source

In the Meteor world, most packages bring a library with them. Meteor even packages the jQuery source with its own core source. With our new custom architecture, we were able to load all our libraries from popular CDNs where it’s highly likely that the user already has them cached. Additionally, when we push a new version of our app, users don't need to re-download these libraries. The libraries are referenced from the page’s <head>.


Lazily load anything and everything we can

As we found out in our analysis, some libraries such as moment.js and chrono.js are very expensive to load. Fortunately, they aren’t required for first render to show anything meaningful to the user. So we load them using async script tags at the very end of the page, served from a CDN of course.
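Our actual tags are not reproduced here. As a rough equivalent, the sketch below does the same thing from Javascript rather than from markup: it appends a script element with the `async` flag to the end of the body. The `loadScriptAsync` helper and the example URL are hypothetical.

```javascript
// Append a <script async> element for `url` to the end of the body.
// `doc` is passed in so the function can be tried outside a browser.
function loadScriptAsync(doc, url) {
  const script = doc.createElement('script');
  script.src = url;
  script.async = true; // do not block parsing or other scripts
  doc.body.appendChild(script);
  return script;
}

// In a browser (the URL is a placeholder, not a real CDN path):
//   loadScriptAsync(document, 'https://cdn.example.com/some-library.js');
```

Because the script is async, the browser can keep parsing and rendering the server-rendered HTML while the library downloads.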


Chrome also recently made loading async scripts even faster.

Results

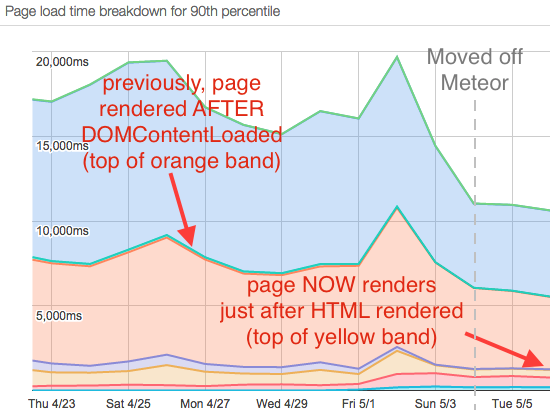

Moving to the new architecture was a huge improvement. Our time to first render dropped considerably, from almost 8 seconds (90th percentile) down to about one second. We have also already received great feedback from our users. Some even said it loaded quicker than Gmail’s own compose window.

We certainly miss Meteor in our compose window: reactivity on the front-end, its useful local development toolchain, and the plethora of great packages. But we’ve been able to find or build equivalents to those in our new world of Express and Backbone. However, we're still using Meteor for our application dashboard where page load time isn't as important.

We’ll continue to publish follow-up posts about our journey scaling Mixmax from a successful prototype to a product that scales to many thousands of users. Try it today by adding Mixmax to Gmail.

Want to work on interesting problems like these? Email careers@mixmax.com and let’s grab coffee!